Blog 2: The AI Chip Race

“The AI chip race is forging the path to the mysterious realm of Artificial General Intelligence (AGI)”

Introduction

Advancements in AI chip architectures are a fundamental driver in the pursuit of the still-elusive goal of Artificial General Intelligence (AGI). As AI models scale up in parameter count, dataset size, and algorithmic complexity, efficient computing power becomes a critical bottleneck. General-purpose CPUs are increasingly inadequate for the throughput that highly parallel deep-learning training and inference demand. This has created a need for specialized AI chips such as GPUs, TPUs, and custom ASICs, which offer far greater parallel processing capability for training and inference.

By some market estimates, Nvidia controls roughly 85% of the AI chip market and AMD about 10%, with the remaining 5% shared among many other chip manufacturers. End customers are left with little bargaining power because of vendor lock-in and a lack of alternatives. This has prompted the other players to develop distinct value propositions, aiming to carve out a niche within that remaining 5% and to grow their share of this rapidly expanding opportunity.

Hyperscalers such as Microsoft (with OpenAI), Meta, Amazon, and Google are investing in the design of their own AI processors, a process that takes several years but promises significant performance gains. The main roadblocks to broader adoption are hardware design efficiency (balancing speed, power, thermal, and cooling costs) and software, where these chips still lack a mature ecosystem comparable to Nvidia's CUDA. To close that software gap, an industry group called the UXL Foundation, whose members include Intel and Google, is working to create open alternatives to CUDA for programming AI hardware.

These custom chips aim to meet the massive computational demands of AI by incorporating advanced features:

High-Bandwidth Memory (HBM): Very high memory bandwidth keeps compute units fed, which is critical for handling large models and datasets at high throughput.

Low-Latency Interconnects: Technologies such as NVLink and InfiniBand enable high-speed communication between processing units, reducing bottlenecks in distributed computing environments.

High-Speed, Low-Latency Storage: Training draws on massive datasets, which need reliable, capacity-optimized all-flash storage to keep accelerators supplied with data.

Tensor Cores: Specialized units designed to perform tensor/matrix operations efficiently, crucial for deep learning tasks.

Mixed-Precision Arithmetic (FP16, INT8): Reduces computational load while maintaining model accuracy, optimizing performance per watt.
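To make the mixed-precision point concrete, here is a minimal sketch of symmetric INT8 quantization in Python. The single per-tensor scale and the sample weights are illustrative assumptions rather than any particular chip's scheme; the point is that each value shrinks from 4 bytes to 1 while the rounding error stays within half a quantization step.

```python
# Minimal sketch of symmetric per-tensor INT8 quantization (an illustrative
# assumption, not any vendor's exact scheme).


def quantize_int8(values):
    """Map floats to int8 codes using a single scale derived from max |x|."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to nearest and clamp into the symmetric signed 8-bit range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate floats from int8 codes."""
    return [c * scale for c in codes]


weights = [0.82, -1.27, 0.003, 0.5, -0.31]  # hypothetical sample weights
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)

# Each FP32 value takes 4 bytes; each INT8 code takes 1 byte.
print("codes:", codes)
print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
print("memory: %d bytes -> %d bytes" % (4 * len(weights), len(codes)))
```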

The leading AI chip architectures integrate these hardware accelerators with optimized software libraries and frameworks such as CUDA, cuDNN, TensorFlow, and PyTorch to maximize computational efficiency. This integration rests on two major principles:

Parallel Computing: Utilizing multiple processing units to perform simultaneous calculations, drastically reducing training and inference times.

Hardware-Software Co-Design: Tight integration between hardware capabilities and software optimizations to exploit specific chip features fully.
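The data-parallel flavor of parallel computing can be sketched in a few lines. In this toy Python example (a hypothetical linear model with made-up data), each simulated device computes a gradient on its own shard of the batch and the results are averaged, standing in for the all-reduce that real frameworks run over interconnects. The shards are processed one after another here; on real hardware they would run simultaneously.

```python
# Toy data parallelism: each simulated "device" computes a gradient on its
# shard of the batch, then the gradients are averaged (the all-reduce step).
# Model: y = w * x with mean-squared-error loss. Data is made up.


def grad_mse(w, xs, ys):
    """d/dw of mean((w*x - y)^2) over one shard."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def data_parallel_grad(w, xs, ys, num_devices):
    """Split the batch evenly, compute per-shard gradients, then average."""
    shard = len(xs) // num_devices
    assert shard * num_devices == len(xs), "batch must divide evenly"
    local = [
        grad_mse(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_devices)  # sequential here; parallel on real hardware
    ]
    return sum(local) / num_devices  # stand-in for an all-reduce average


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1]
w = 0.5

full = grad_mse(w, xs, ys)
parallel = data_parallel_grad(w, xs, ys, num_devices=4)
print("full-batch gradient:   ", full)
print("data-parallel gradient:", parallel)
```

Because the shards are equal-sized, the averaged gradient matches the full-batch gradient, which is why data parallelism can cut training time without changing the underlying math.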

Megacap Players

Nvidia

Nvidia recently launched its next-generation GPU family, Blackwell, which introduces six technologies for AI training and real-time LLM inference on models scaling up to 10 trillion parameters. Blackwell-architecture GPUs pack 208 billion transistors, are manufactured on a custom TSMC 4nm-class process, and join two reticle-limit GPU dies with a 10 TB/s chip-to-chip interconnect. The second-generation Transformer Engine adds micro-tensor scaling and 4-bit floating point (FP4) AI inference, doubling compute capacity. Fifth-generation NVLink offers 1.8 TB/s of bidirectional throughput per GPU, enabling high-speed communication among up to 576 GPUs. The RAS engine adds reliability, availability, and serviceability features with AI-driven diagnostics, secure AI capabilities protect models and data with advanced encryption, and the decompression engine accelerates data analytics by supporting the latest formats.
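Micro-tensor scaling is easier to see with a toy example. The sketch below is a simplified take on block-scaled 4-bit floating point: values are grouped into blocks, each block gets its own scale, and scaled values are rounded to the FP4 E2M1 grid (magnitudes 0, 0.5, 1, 1.5, 2, 3, 4, 6). The block size of 16 and the plain-float scale are assumptions for illustration; this is not Nvidia's implementation.

```python
# Simplified block-scaled FP4 (E2M1) quantization. The per-block scale is
# kept as an ordinary Python float here; real formats store it in a compact
# encoding. The block size of 16 is an assumption for illustration.

FP4_E2M1 = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]  # non-negative magnitudes
BLOCK = 16


def nearest_fp4(x):
    """Round a scaled value to the nearest signed E2M1 value."""
    mag = min(FP4_E2M1, key=lambda v: abs(abs(x) - v))
    return mag if x >= 0 else -mag


def quantize_blocks(values, block=BLOCK):
    """Return (codes, scales): each block's scale maps its max |x| onto 6.0."""
    codes, scales = [], []
    for start in range(0, len(values), block):
        chunk = values[start:start + block]
        max_abs = max(abs(v) for v in chunk)
        scale = max_abs / 6.0 if max_abs > 0 else 1.0
        scales.append(scale)
        codes.extend(nearest_fp4(v / scale) for v in chunk)
    return codes, scales


def dequantize_blocks(codes, scales, block=BLOCK):
    return [c * scales[i // block] for i, c in enumerate(codes)]


# Two blocks with very different magnitudes.
data = [0.01 * i for i in range(16)] + [10.0 * i for i in range(16)]
codes, scales = quantize_blocks(data)
restored = dequantize_blocks(codes, scales)

# Compare with a single scale for the whole tensor.
global_codes, _ = quantize_blocks(data, block=len(data))
print("nonzero small-block codes, per-block scales:", sum(c != 0 for c in codes[:16]))
print("nonzero small-block codes, single scale:    ", sum(c != 0 for c in global_codes[:16]))
```

With one scale for the whole tensor, the small-magnitude block rounds entirely to zero; per-block scales preserve it, which is what makes such low-precision formats usable.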

Advanced Micro Devices (AMD)

The upcoming AMD Instinct MI350 GPUs, scheduled to enter production in 2025, will use the CDNA 4 architecture, which AMD says delivers up to 35 times the AI inference performance of the MI300 series. These GPUs will be equipped with high-bandwidth memory, likely HBM3E or its successor, to sustain the data throughput required for advanced AI and machine learning workloads. The MI350 series is also expected to improve energy efficiency and computational density, making it well suited for large-scale data center deployments. These enhancements include optimized matrix cores (AMD's counterpart to tensor cores) for accelerated deep learning operations and increased parallelism for complex neural network models. AMD's Ryzen 9000 Series desktop processors also deserve a mention: they have built a reputation as some of the most advanced and powerful options for gamers and professionals, but they are not designed for data center scale.

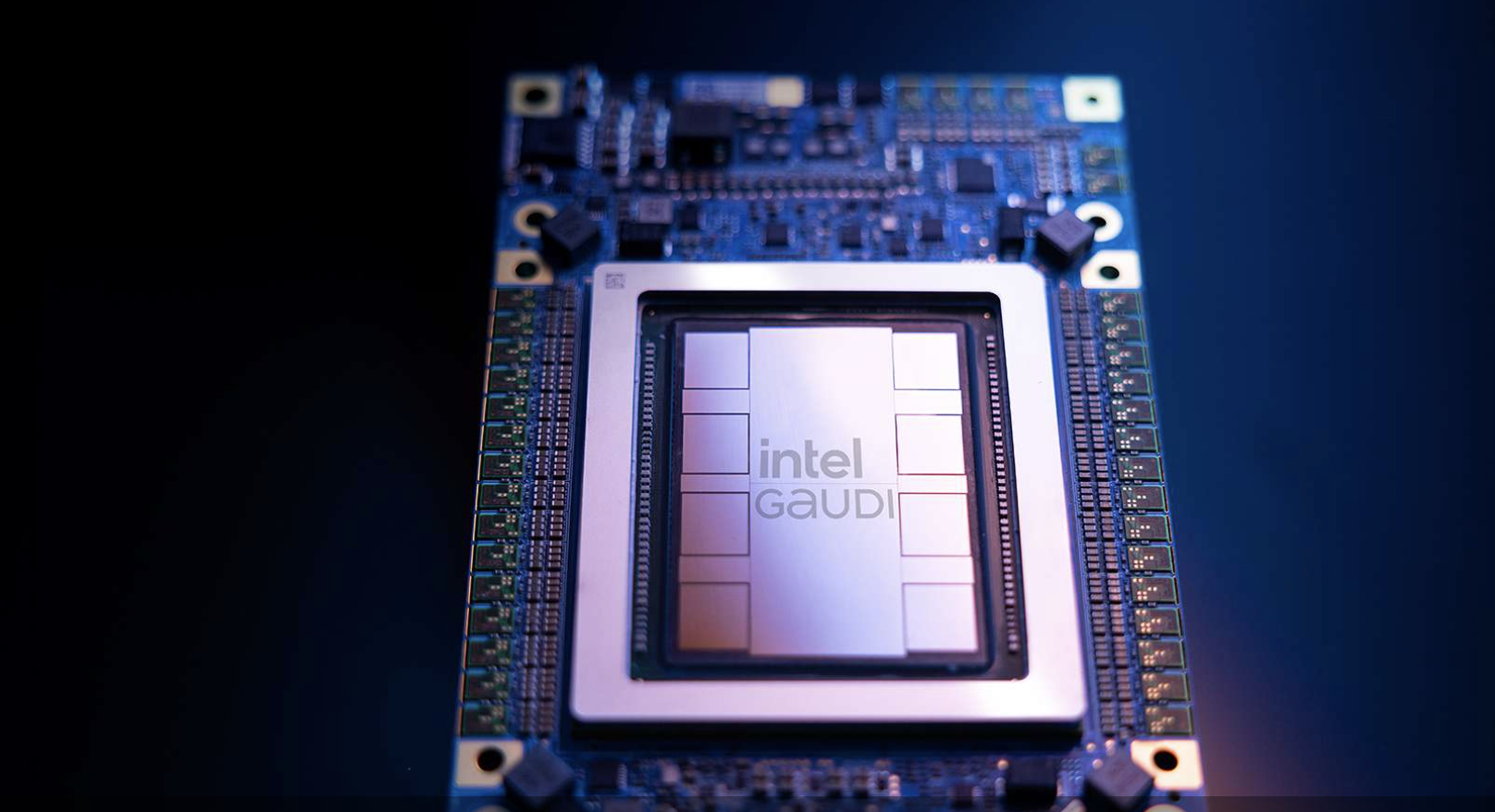

Intel

Intel's Gaudi 3 AI accelerators, built on a 5nm process, are designed for next-generation AI workloads and bring significant architectural enhancements over their predecessors. Each Gaudi 3 accelerator comprises two identical dies, each containing four matrix math engines, 32 fifth-generation Tensor Processor Cores (TPCs), and 48 MB of SRAM, for a total of eight matrix math engines, 64 TPCs, and 96 MB of SRAM per accelerator. The dies are joined by a high-bandwidth interface, so software sees them as a single device.

Each Gaudi 3 accelerator provides 128 GB of high-bandwidth memory (HBM) with 3.7 TB/s of memory bandwidth, plus 16 lanes of PCIe 5.0 connectivity. A standout feature is the integration of 24 ports of 200-Gigabit Ethernet (GbE) using RDMA over Converged Ethernet (RoCE), which enables high-speed, low-latency communication between accelerators within a server and across multiple nodes.

Gaudi 3's scalability is another key feature, supporting configurations from single-node setups with eight accelerators to multi-node clusters with thousands of accelerators. A single node can achieve 14.7 petaflops of FP8 compute performance, scaling up to 15 exaflops in a 1,024-node cluster. These capabilities make Gaudi 3 a formidable competitor in the AI accelerator market, offering high performance, scalability, and efficiency for deep learning applications.
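The scaling figures above can be checked with back-of-envelope arithmetic and combined with the 3.7 TB/s HBM figure to get a simple roofline estimate. This sketch assumes the 14.7 PFLOPS node figure divides evenly across eight accelerators and treats the FP8 peak as the compute ceiling; real kernels rarely reach peak.

```python
# Back-of-envelope arithmetic using the Gaudi 3 figures quoted above.
# Assumes the 14.7 PFLOPS node figure splits evenly across 8 accelerators.

NODE_FP8_FLOPS = 14.7e15      # FP8 FLOP/s for one 8-accelerator node
ACCELS_PER_NODE = 8
NODES = 1024
HBM_BYTES_PER_S = 3.7e12      # per-accelerator HBM bandwidth

per_accel = NODE_FP8_FLOPS / ACCELS_PER_NODE
cluster_exaflops = NODE_FP8_FLOPS * NODES / 1e18

# Roofline "ridge point": arithmetic intensity (FLOPs per byte read from HBM)
# above which a kernel can, in principle, become compute-bound.
ridge = per_accel / HBM_BYTES_PER_S


def attainable_flops(intensity):
    """Simple roofline: min(peak compute, bandwidth * intensity)."""
    return min(per_accel, HBM_BYTES_PER_S * intensity)


print(f"per accelerator: {per_accel / 1e15:.2f} PFLOPS")
print(f"1,024-node cluster: {cluster_exaflops:.2f} EFLOPS")
print(f"ridge point: {ridge:.0f} FLOP/byte")
print(f"at 100 FLOP/byte: {attainable_flops(100) / 1e12:.0f} TFLOPS")
```

A ridge point of roughly 500 FLOPs per byte means that kernels with lower arithmetic intensity, such as many inference-time matrix-vector products, are limited by memory bandwidth rather than compute.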

Alphabet/Google

Google's sixth-generation Trillium Tensor Processing Units (TPUs) deliver a 4.7x increase in peak compute performance per chip compared with the previous-generation TPU v5e. Google achieved this by enlarging the matrix multiply units (MXUs) and raising the clock speed, so each chip handles intensive AI computations more efficiently. The memory subsystem doubles both HBM capacity and bandwidth relative to TPU v5e, with 32 GB of high-bandwidth memory (HBM) per chip and roughly 1.6 TB/s of memory bandwidth. This increased bandwidth speeds up data transfer and processing, which is crucial for training large-scale AI models.
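One way to read the 4.7x compute and 2x bandwidth figures together is through the roofline ridge point $I^{*} = P/B$, the arithmetic intensity (FLOPs per byte of HBM traffic) above which a kernel can become compute-bound. Assuming both ratios are measured against the same predecessor:

```latex
I^{*} = \frac{P}{B}, \qquad
\frac{I^{*}_{\text{Trillium}}}{I^{*}_{\text{prev}}}
  = \frac{4.7\,P_{\text{prev}} / \left(2\,B_{\text{prev}}\right)}{P_{\text{prev}} / B_{\text{prev}}}
  = \frac{4.7}{2} \approx 2.35
```

In other words, workloads need about 2.35 times more FLOPs per byte moved to stay compute-bound on Trillium, which suggests that on-chip data reuse matters even more on the new generation.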

The interconnect technology has also been enhanced, with double the interchip interconnect (ICI) bandwidth, enabling faster communication between TPUs within a pod. Trillium TPUs include third-generation SparseCore technology, which optimizes the processing of sparse data commonly found in advanced ranking and recommendation systems. This results in more efficient handling of large-scale embeddings and other sparse data structures.

Energy efficiency has also improved significantly: Google reports that Trillium TPUs are over 67% more energy efficient than TPU v5e. These TPUs can scale up to 256 units in a single high-bandwidth, low-latency pod and further to thousands of TPUs across multiple pods, interconnected by a multi-petabit-per-second datacenter network.

Trillium TPUs are integral to Google’s AI Hypercomputer, designed to support the next wave of foundation models and AI agents with reduced latency and lower operational costs. This architecture supports training and serving long-context, multimodal models, providing a substantial boost to Google DeepMind’s Gemini models and other large-scale AI applications.

Amazon/AWS

AWS Trainium2 is designed to deliver a significant leap in performance and efficiency for training AI models. Compared with the first-generation Trainium, it offers up to 4x faster training, 3x the memory capacity, and 2x better energy efficiency. It features 96 GB of high-bandwidth memory and supports a range of data types, including FP32, TF32, BF16, FP16, UINT8, and the new configurable FP8 (cFP8) data type, providing a robust platform for large-scale AI training. Trainium2 chips are manufactured on a 7nm process and deliver 650 teraFLOPS of FP16 performance per accelerator. Trainium2-based instances can scale up to 100,000 chips in EC2 UltraClusters, enabling supercomputer-class performance for training models with up to 300 billion parameters in a fraction of the time required by previous generations.
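To see why a 300-billion-parameter model calls for a large cluster, here is a rough lower bound on how many 96 GB chips are needed just to hold training state. The 16 bytes per parameter figure is a common rule of thumb for mixed-precision training with an Adam-style optimizer, not an AWS number, and activations, parallelism overheads, and framework memory are ignored.

```python
import math

# Rough lower bound on accelerators needed just to hold training state.
# Assumption: mixed-precision training with an Adam-style optimizer, using the
# common rule of thumb of ~16 bytes per parameter:
#   2 (BF16/FP16 weights) + 2 (gradients) + 4 (FP32 master weights)
#   + 4 (Adam first moment) + 4 (Adam second moment)
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4

PARAMS = 300e9            # model size mentioned above
HBM_PER_CHIP = 96e9       # 96 GB of HBM per Trainium2 chip (decimal GB assumed)

state_bytes = PARAMS * BYTES_PER_PARAM
min_chips = math.ceil(state_bytes / HBM_PER_CHIP)

print(f"training state: {state_bytes / 1e12:.1f} TB")
print(f"minimum chips (ignoring activations and overhead): {min_chips}")
```

Even this optimistic floor covers memory alone; cutting training time is what pushes real deployments toward much larger clusters.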

Microsoft/Azure

The Microsoft Maia 100 AI accelerator is designed for high-performance AI workloads on the Azure cloud platform. It is built on TSMC's 5nm process with 105 billion transistors and delivers a peak performance of 800 TFLOPS, with tensor processing capabilities optimized for deep learning and large-scale model training. Maia 100 aims to improve the efficiency and scalability of AI services such as Microsoft Copilot and the Azure OpenAI Service, positioning Microsoft as a significant player in AI infrastructure. It also marks Microsoft's strategic move to reduce reliance on external vendors like NVIDIA and to optimize AI infrastructure across its ecosystem. The chip is paired with a dedicated liquid cooling system that handles the thermal demands of AI computation while fitting within Microsoft's existing data center infrastructure.

Meta

The Meta Training and Inference Accelerator (MTIA) v2 is an AI chip designed to optimize Meta's AI workloads, specifically ranking and recommendation models. MTIA v2 is built on TSMC's 5nm process, improving transistor density and energy efficiency. It features an 8x8 grid of processing elements (PEs), and Meta reports 3.5x higher dense compute performance and 7x higher sparse compute performance than the first generation.

MTIA v2 runs at 1.35 GHz within a 90-watt power envelope. It integrates 256 MB of on-chip SRAM, double its predecessor's capacity, which improves data handling and processing speed. The architecture is designed to balance compute, memory bandwidth, and memory capacity, specifically for serving large-scale AI models with low latency and high throughput.

The chip's performance gains show up in Meta's internal testing: Meta reports a 3x improvement over the first-generation chip across key models and a 6x increase in model serving throughput at the platform level. MTIA v2 also integrates with Meta's AI software stack, including PyTorch 2.0 and the Triton-MTIA compiler backend, which generates high-performance code for the MTIA hardware and improves developer productivity and system efficiency.

Qualcomm

Qualcomm's Adreno GPUs, such as the Adreno 750 integrated into the Snapdragon 8 Gen 3 platform, are built on TSMC's 4nm process technology. The Adreno 750 offers significant improvements over its predecessors, including up to 25% faster graphics rendering and better power efficiency. It includes over 10 billion transistors, delivering a substantial boost in computational power. The GPU supports high frame rates and advanced graphics features, making it well suited for demanding mobile applications and gaming. It also provides enhanced AI capabilities and optimized power consumption for longer battery life in mobile devices; however, these GPUs are unlikely to be deployed in large-scale data center environments.

Source: https://www.qualcomm.com/products/features/adreno

Qualcomm has invested in high-performance and cost-optimized cloud AI inferencing for generative AI. The Qualcomm Cloud AI 100 Ultra is an advanced AI inference processor, meticulously designed for high-efficiency deployment in data centers, targeting generative AI and large language model (LLM) applications. This processor is fabricated using TSMC's 7nm process technology, ensuring a high transistor density with enhanced performance and energy efficiency.

The AI 100 Ultra is built from Cloud AI 100 SoCs, and the following per-chip figures describe a single SoC; the Ultra card combines four of them. Each SoC has over 10 billion transistors and supports a range of data precisions, including INT8, INT16, FP16, and FP32, making it versatile across AI workloads. It achieves 400 TOPS (tera operations per second) of INT8 performance, which is crucial for high-speed inference. It incorporates 144 MB of on-chip SRAM, which reduces latency by minimizing accesses to external memory, complemented by four 64-bit LPDDR4X memory controllers providing 134 GB/s of bandwidth.
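The large on-chip SRAM matters because LLM token generation is often limited by memory bandwidth. A common first-order estimate is that each generated token streams every weight once, so single-stream decode speed is bounded by bandwidth divided by model size. The sketch below applies that bound to the 134 GB/s figure; the 7B and 13B model sizes are hypothetical, and the bound ignores SRAM reuse, batching, and KV-cache traffic.

```python
# Upper bound on single-stream decode speed for a memory-bandwidth-bound LLM:
# generating each token requires streaming (roughly) every weight once, so
#   tokens/s <= memory bandwidth / model size in bytes.
# Ignores on-chip SRAM reuse, batching, KV-cache traffic, and compute limits.


def max_tokens_per_second(params, bytes_per_param, bandwidth_bytes_per_s):
    model_bytes = params * bytes_per_param
    return bandwidth_bytes_per_s / model_bytes


LPDDR_BW = 134e9  # 134 GB/s figure quoted above

for params in (7e9, 13e9):  # hypothetical model sizes
    for label, nbytes in (("INT8", 1), ("FP16", 2)):
        tps = max_tokens_per_second(params, nbytes, LPDDR_BW)
        print(f"{params / 1e9:.0f}B params @ {label}: <= {tps:.1f} tokens/s")
```

Halving the bytes per parameter doubles the bound, which is why low-precision formats and keeping hot data in SRAM both pay off for inference chips.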

One of the notable features of the AI 100 Ultra is its power efficiency, operating at 150W for single-card configurations. It can efficiently handle AI models with up to 100 billion parameters on a single card, scaling to 175 billion parameters with dual-card setups. Multiple AI 100 Ultra cards can be interconnected for larger and more complex AI models, facilitated by the Qualcomm AI Stack and Cloud AI SDK, which streamline model porting and optimization processes.

The AI 100 Ultra supports PCIe 4.0 x8 for high-speed connectivity, ensuring rapid data transfer rates between the AI accelerator and host system. Its architecture includes advanced tensor processing cores and a custom networking protocol that provides scalable and efficient performance for large-scale AI deployments, making it an ideal solution for AI-intensive applications in cloud data centers.

IBM

The IBM NorthPole AI Accelerator is a highly specialized AI inference chip built on a 12nm process, with 22 billion transistors on an 800 mm² die. Its 256-core array with 192 MB of distributed SRAM achieves over 200 TOPS at 8-bit precision, 400 TOPS at 4-bit, and 800 TOPS at 2-bit, all at a nominal 400 MHz clock. It is designed for low-precision operation, which suits a wide range of inference applications, and its efficiency removes the need for bulky cooling systems. By keeping data in local memory and moving it over a network-on-chip (NoC), it achieves exceptional inference performance; however, it is not suitable for training large language models (LLMs).
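The NorthPole figures can be cross-checked with simple arithmetic: dividing the 8-bit throughput by core count and clock gives the implied work each core completes per cycle. This sketch uses only the numbers quoted above; because the source says "over 200 TOPS", the result is a lower bound.

```python
# Derive the per-core throughput implied by the NorthPole figures quoted above
# (256 cores, 400 MHz, ~200 TOPS at 8-bit). Since the source says "over 200
# TOPS", this is a lower bound.
CORES = 256
CLOCK_HZ = 400e6
TOPS_8BIT = 200e12

ops_per_core_per_cycle = TOPS_8BIT / (CORES * CLOCK_HZ)
print(f"implied 8-bit ops per core per cycle: {ops_per_core_per_cycle:.0f}")

# The quoted figures double each time operand width halves, consistent with
# packing twice as many operands into the same datapath each cycle.
for bits, multiplier in ((8, 1), (4, 2), (2, 4)):
    print(f"{bits}-bit: {TOPS_8BIT * multiplier / 1e12:.0f} TOPS")
```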

Apple

The Apple M4 is a system-on-chip with strong AI acceleration, built on TSMC's second-generation 3nm process and incorporating 28 billion transistors. It features a hybrid CPU with up to 10 cores (4 performance cores and 6 efficiency cores), with the performance cores clocked at up to about 4.4 GHz. The GPU has up to 10 cores, making it adept at handling intensive graphical and computational tasks.

The M4 includes a 16-core Neural Engine capable of 38 trillion operations per second (TOPS), significantly boosting machine learning and AI performance. The Neural Engine is optimized for both high throughput and low latency, enabling real-time AI inference. The chip also uses LPDDR5X memory with up to 120 GB/s of bandwidth, ensuring rapid data processing and efficient memory utilization.

For enhanced graphics capabilities, the M4 integrates hardware-accelerated ray tracing and supports up to 6K resolution on external displays. Its media engine provides hardware-accelerated H.265 (HEVC) encode and decode as well as AV1 decode, allowing efficient media processing. The chip's power management and thermal design sustain performance while maintaining energy efficiency, making it ideal for high-end laptops, tablets, and desktops aimed at professional and consumer markets, but it is not intended for data center applications.

Unicorn Startups

SambaNova Systems

The SambaNova SN40L AI accelerator is engineered for high-performance AI workloads, combining advanced hardware with a deep memory architecture. It uses a dual-chip design built on a 5nm process node and integrates 1,040 compute cores. The SN40L relies on a three-tier memory hierarchy: on-chip SRAM, 64 GB of HBM3 high-bandwidth memory, and 1.5 TB of DDR5 memory. This configuration allows it to handle large AI models efficiently while keeping frequently used data close to the compute units.
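The three-tier hierarchy can be pictured as a placement problem: keep the most frequently accessed data in the fastest tier that has room. The greedy sketch below is a hypothetical illustration, not SambaNova's software; the HBM and DDR capacities come from the figures above, while the SRAM size and the tensor list are placeholders.

```python
# Toy greedy placement across a three-tier memory hierarchy (SRAM, HBM, DDR).
# HBM and DDR capacities follow the figures above; the SRAM size is a
# hypothetical placeholder. This is an illustration, not SambaNova's compiler.

GB = 1e9
TIERS = [                      # fastest first
    ("SRAM", 0.5 * GB),        # hypothetical on-chip capacity
    ("HBM3", 64 * GB),
    ("DDR5", 1500 * GB),
]


def place(tensors):
    """Assign each (name, size_bytes, accesses_per_step) to the fastest tier
    with room, visiting the most frequently accessed tensors first."""
    free = {name: cap for name, cap in TIERS}
    placement = {}
    for name, size, _ in sorted(tensors, key=lambda t: t[2], reverse=True):
        for tier, _cap in TIERS:
            if free[tier] >= size:
                free[tier] -= size
                placement[name] = tier
                break
        else:
            raise MemoryError(f"{name} does not fit in any tier")
    return placement


# Hypothetical model pieces: hot data is read every step, while rarely used
# weights of a very large model are parked in capacity memory.
tensors = [
    ("activations", 0.3 * GB, 100),
    ("active_weights", 40 * GB, 10),
    ("inactive_weights", 1200 * GB, 1),
]
print(place(tensors))
```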

The device supports a range of AI tasks, including large language models and automated speech recognition, making it well suited for enterprise-scale AI deployments. SambaNova delivers a full-stack platform purpose-built for generative AI, with the SN40L at its heart; the chip is designed to handle both dense and sparse computation and to combine large-capacity memory with fast memory. Its greatest value proposition is that it can perform both training and inference on a single platform, eliminating the redundancy of separate legacy systems, and SambaNova says it can power the largest multi-trillion-parameter models behind enterprise-grade generative AI. SambaNova's approach to managing supply chain challenges and competing in the AI market emphasizes a complete platform, from hardware to software, as the way to stand out in the crowded AI accelerator space.

Cerebras Systems

The Cerebras Systems CS-3 AI accelerator represents a significant leap in AI hardware, built around the company's unique Wafer Scale Engine (WSE) architecture. Its WSE-3 chip is built on a 5nm process and contains 4 trillion transistors, making it the world's largest AI chip. This wafer-scale die integrates 900,000 AI-optimized cores designed to efficiently handle the sparse and dense linear algebra at the heart of AI workloads, largely sparing developers the partitioning schemes (model, data, and tensor parallelism) that large GPU deployments require.

The CS-3 delivers up to 125 petaflops of peak AI performance per chip, supporting extensive parallel processing. Its interconnect and memory fabric enable high-speed communication between cores, minimizing latency and maximizing throughput. The architecture supports up to 1,200 TB of memory in a single system, allowing it to handle enormous AI models with up to 24 trillion parameters.

Furthermore, the CS-3 can scale across multiple systems, with the potential to form clusters of up to 2,048 interconnected units, collectively delivering up to 256 exaflops of AI performance. This scalability is facilitated by a high-bandwidth, low-latency network that ensures efficient data distribution across the cluster, making it ideal for both training and inference of large-scale AI models.

This design decouples memory and compute resources, optimizing power efficiency and simplifying the software stack required for AI development. The CS-3's innovative approach significantly reduces the code complexity compared to traditional GPU-based systems, enabling faster deployment and iteration of AI models.
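The Cerebras scaling figures can be sanity-checked with two divisions: the cluster figure over the system count gives the implied peak per system, and the memory capacity over the parameter count gives the memory budget per parameter. This sketch uses only the numbers quoted above and assumes decimal units.

```python
# Consistency check on the Cerebras scaling figures quoted above.
CLUSTER_EXAFLOPS = 256
MAX_SYSTEMS = 2048
MEMORY_BYTES = 1200e12        # 1,200 TB
MAX_PARAMS = 24e12            # 24 trillion parameters

per_system_pflops = CLUSTER_EXAFLOPS * 1000 / MAX_SYSTEMS
bytes_per_param = MEMORY_BYTES / MAX_PARAMS

print(f"implied peak per system: {per_system_pflops:.0f} PFLOPS")
print(f"memory budget per parameter: {bytes_per_param:.0f} bytes")
```

About 50 bytes per parameter leaves headroom above the roughly 16 bytes per parameter commonly cited for mixed-precision Adam training state.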

Groq

The Groq LPU (Language Processing Unit) is an AI accelerator specialized for high-performance inference. The first-generation LPU is built on a 14nm process and incorporates over 20 billion transistors. Its massively parallel, statically scheduled architecture is designed to handle large-scale AI models efficiently while keeping power consumption in check.

The Groq LPU operates at a clock speed of 1.2 GHz and can achieve up to 1 PetaOPS (peta operations per second) of peak 8-bit integer performance. It supports a range of data precisions, including INT8, FP16, and BFLOAT16, providing versatility across AI workloads. The chip features about 230 MB of on-chip SRAM, significantly reducing data access latency and improving overall processing speed.

One of the key innovations of the Groq LPU is its deterministic processing model, which moves scheduling from runtime hardware to the compiler and removes the dynamic scheduling and load balancing typically required in GPU architectures. This deterministic approach ensures predictable and consistent performance, which is critical for real-time AI applications.
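Deterministic execution rests on compile-time scheduling: when every operation has a fixed, known latency, the compiler can compute exactly when each one starts and finishes. The toy scheduler below illustrates the idea with hypothetical operations and latencies and assumes unlimited functional units; it is not Groq's compiler.

```python
# Toy compile-time (static) schedule: every operation has a fixed latency in
# cycles, so the start and finish cycle of each op is known before execution.
# Op names and latencies are hypothetical; this is not Groq's compiler.

OPS = {  # name: (latency_cycles, dependencies)
    "load_x": (4, []),
    "load_w": (4, []),
    "matmul": (16, ["load_x", "load_w"]),
    "bias_add": (2, ["matmul"]),
    "activation": (3, ["bias_add"]),
    "store": (4, ["activation"]),
}


def static_schedule(ops):
    """As-soon-as-possible schedule, assuming unlimited functional units."""
    start, finish = {}, {}
    remaining = dict(ops)
    while remaining:
        ready = [n for n, (_, deps) in remaining.items()
                 if all(d in finish for d in deps)]
        if not ready:
            raise ValueError("dependency cycle in op graph")
        for name in ready:
            latency, deps = remaining.pop(name)
            start[name] = max((finish[d] for d in deps), default=0)
            finish[name] = start[name] + latency
    return start, finish


start, finish = static_schedule(OPS)
for name in OPS:
    print(f"{name:>10}: cycles {start[name]:>2} to {finish[name]:>2}")
print("total latency:", max(finish.values()), "cycles")
```

Running the scheduler again always yields the same cycle counts, which is the property that lets a statically scheduled chip promise predictable latency.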

The LPU is designed to be highly scalable: multiple units can be connected via high-speed, low-latency links to build large AI clusters. Groq's proprietary interconnect technology provides efficient data transfer between LPUs, minimizing bottlenecks and maximizing throughput. Groq also claims a better performance-per-watt ratio than conventional GPUs, making the LPU attractive for data centers looking to optimize both performance and energy consumption.

Source: https://wow.groq.com/why-groq/

Mythic

The Mythic M2000 AI Accelerator, built on a 40nm process, uses Mythic's proprietary Analog Matrix Processor (AMP) technology to achieve significant gains in power efficiency and performance. The M2000 integrates 108 AMP tiles. Each tile contains a Mythic Analog Compute Engine (ACE), an array of flash cells with ADCs (analog-to-digital converters), alongside a 32-bit RISC-V nano-processor, a SIMD vector engine, SRAM, and a high-throughput Network-on-Chip (NoC) router. This design lets the M2000 perform matrix multiplications directly within the memory arrays, significantly reducing latency and increasing throughput.
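The idea of computing inside the memory array can be simulated in an idealized way: weights act as cell conductances, inputs as voltages, the resulting currents add up on each output line, and an ADC digitizes the sum. The noise-free model, the signed weights, and the 8-bit ADC below are simplifying assumptions, not Mythic's design.

```python
# Idealized simulation of analog compute-in-memory for y = W @ x.
# Weights play the role of cell conductances and inputs the role of voltages;
# the currents for each output sum on a shared line (Kirchhoff's current law),
# and an ADC digitizes the total. Noise is ignored. Signed weights are modeled
# directly; physical arrays often represent them with pairs of cells.


def analog_matvec(weights, x, adc_bits=8, full_scale=None):
    # Ideal analog step: each output is a sum of conductance * voltage terms.
    currents = [sum(w * v for w, v in zip(row, x)) for row in weights]
    if full_scale is None:
        full_scale = max(abs(c) for c in currents) or 1.0
    levels = 2 ** (adc_bits - 1) - 1          # signed ADC code range
    step = full_scale / levels
    # ADC: round each current to the nearest code, clamp, convert back.
    codes = [max(-levels, min(levels, round(c / step))) for c in currents]
    return [code * step for code in codes], step


W = [[0.2, -0.5, 0.1], [0.7, 0.3, -0.4]]   # hypothetical weights
x = [1.0, 0.5, -2.0]                        # hypothetical inputs
y, step = analog_matvec(W, x)
exact = [sum(w * v for w, v in zip(row, x)) for row in W]
print("analog:", y)
print("exact: ", exact)
```

The only error in this idealized model is ADC rounding, at most half a step; real analog arrays also contend with device noise and drift.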

The M2000 can deliver up to 35 TOPS (tera operations per second) at a typical power consumption of about 4 watts. Much of this efficiency comes from storing weights on chip, which eliminates the need for external DRAM. The chip connects to host processors over PCIe 2.0, enabling efficient data transfer and low-latency execution of compute-intensive models.

Mythic says its analog compute-in-memory approach offers up to 10 times better cost efficiency than comparable digital architectures. This makes the M2000 an attractive solution for edge AI applications, where power efficiency, cost, and performance are critical, and its ability to run large AI models with low latency and high efficiency positions it as a key player in the AI accelerator market.

Source: https://mythic.ai/product/

d-Matrix

The d-Matrix Corsair C8 AI Accelerator is built on digital in-memory compute (DIMC) technology and uses a chiplet-based architecture to run transformer-based inference models efficiently. It features 2,048 DIMC units backed by 256 GB of LPDDR5 RAM and 2 GB of SRAM. Fabricated on a 6nm process, it is claimed to reach up to 9,600 TFLOPS and to be nine times faster than Nvidia's H100 GPU on generative AI workloads. The accelerator is designed to handle complex AI tasks with high speed and efficiency, providing a significant boost for large-scale AI models and applications.

Source: https://www.d-matrix.ai/product/

Tenstorrent

The Tenstorrent Grayskull AI Accelerator is an advanced RISC-V-based solution designed to speed up inference by handling sparse and dynamic computation patterns which are common in contemporary AI models. It features an architecture leveraging 96 Tensix cores on the Grayskull e75 model and 120 Tensix cores on the e150 model. These cores operate at frequencies of 1 GHz and 1.2 GHz respectively.

Tenstorrent's processor cores are called Tensix cores. Each Tensix core includes five RISC-V processors, an array math unit for tensor operations, a SIMD unit for vector operations, hardware for accelerating network packet operations and compression/decompression, and up to 1.5 MB of SRAM.

The Grayskull accelerator uses a standard LPDDR4 DRAM interface with 8 GB of memory, a cost-effective alternative to HBM at the price of lower peak bandwidth. The e75 model consumes 75 W, while the e150 model, designed for more intensive workloads, consumes 200 W. Both models connect to the host over a PCIe Gen 4 interface, providing high throughput for data transfer between the accelerator and the host system.

One of the key innovations in Grayskull is its ability to optimize AI workload execution by reducing data movement, keeping data in each core's local SRAM close to the math units. This is particularly advantageous for transformer models and other complex AI algorithms, improving both performance and energy efficiency. The design philosophy aims at higher math-unit utilization, making Grayskull a scalable alternative to traditional GPU accelerators for edge and data center applications that demand high performance and energy efficiency.
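Math-unit utilization depends partly on how evenly work divides across cores. The sketch below splits a matrix multiply's output into tiles and assigns them round-robin to the 96-core and 120-core configurations; the 32x32 tile size, the 1024x1024 output, and the one-tile-per-core-per-round model are assumptions for illustration, not Tenstorrent's scheduler.

```python
import math

# Sketch of spreading a matrix multiply's output tiles across a grid of cores.
# Assumptions: 32x32 tiles, round-robin assignment, and one tile per core per
# "round"; real Tenstorrent kernels use more sophisticated placement.
TILE = 32


def tile_utilization(m, n, cores):
    tiles = math.ceil(m / TILE) * math.ceil(n / TILE)
    rounds = math.ceil(tiles / cores)       # tiles handled by the busiest core
    utilization = tiles / (rounds * cores)  # fraction of core-rounds doing work
    return tiles, rounds, utilization


for cores in (96, 120):  # e75 and e150 core counts quoted above
    tiles, rounds, util = tile_utilization(1024, 1024, cores)
    print(f"{cores} cores: {tiles} tiles, {rounds} rounds, {util:.1%} utilization")
```

When the tile count is not a multiple of the core count, the last round leaves some cores idle, which is one reason compilers for many-core accelerators pay close attention to how work is partitioned.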

Future Prospects

The escalating demand for generative AI services continues to drive the development of more efficient and powerful AI chips.

Nvidia's latest Blackwell family of GPUs, with advances in CUDA and tensor cores, delivers large generational gains in AI processing capability. AMD's AI-focused Instinct GPUs build on its CDNA architecture, while Intel's acquisition of Habana Labs strengthens its AI product offerings with purpose-built AI accelerators.

Here are other notable players in the advanced semiconductor landscape that stand to play a major role in the mass adoption of AI technologies.

Summary

As the AI chip arms race intensifies, specialized AI accelerators will clearly be crucial for reducing training and inference latency, improving energy efficiency, and enabling increasingly sophisticated AI platforms for model development, training, deployment, and inference. This rapid evolution is pivotal to accelerating the path toward faster computing, where the synergy between hardware and software advances will define the future of AI capabilities.

The entry of disruptive startups with unique value propositions could drive significant innovation and reshape the AI hardware landscape, giving end customers more options and variety so they can choose the platforms that best fit their budget, timeline, and application accuracy requirements.

References

https://www.theverge.com/2024/2/1/24058186/ai-chips-meta-microsoft-google-nvidia/archives/2

https://www.backblaze.com/blog/ai-101-training-vs-inference/

https://semiengineering.com/how-inferencing-differs-from-training-in-machine-learning-applications/

Disclaimer: All opinions shared on this blog are solely mine and do not reflect my past, present, or future employers. All information on this site is intended for entertainment purposes only and any data, graphics, and media used from external sources if not self-created will be cited in the references section. All reasonable efforts have been made to ensure the accuracy of all the content in the blog. This website may contain links to third-party websites which are not under the control of this blog and they do not indicate any explicit or implicit endorsement, approval, recommendation, or preferences of those third-party websites or the products and services provided on them. Any use of or access to those third-party websites or their products and services is solely at your own risk.